One of the strongest applications of generative AI is understanding the context of a text and answering questions about it. Integrating AI into day-to-day processes is likely to become the norm in future applications, and it is highly probable that AI will be integrated into every business application developed using TX Text Control. Applications of artificial intelligence in digital document processing include document analysis, contract summarization, and AI-powered document template generation.

Our role is to provide the interfaces, the typical use cases, and the UI for integrating AI into document processing workflows. That is why we keep sharing ideas on how to integrate AI into applications built with TX Text Control.

One of the most interesting applications of AI in document processing is the ability to ask questions about the content of a document. This article shows how to create a generative AI application for PDF documents in C# using TX Text Control and OpenAI function calling. The application uses an OpenAI GPT model to answer questions about the content of a PDF document.

TX Text Control provides a comprehensive API to create, modify, and process PDF documents, while OpenAI's GPT models can interpret the context of a text and answer questions about it. Combining both technologies allows developers to build applications that understand the content of a PDF document and answer questions about it.

Import the PDF

The first step is to import the PDF document into the application. TX Text Control .NET Server for ASP.NET provides an API to import PDF documents. Because each OpenAI model accepts only a limited amount of text per request (its context window, measured in tokens), the content sent to OpenAI must be kept short. This is why the PDF document is split into smaller chunks.

The following method opens a PDF document using the ServerTextControl class and creates smaller chunks of plain text.

```csharp
// split a PDF document into chunks
public static List<string> Chunk(byte[] pdfDocument, int chunkSize, int overlap = 1)
{
    // create a new ServerTextControl instance
    using (TXTextControl.ServerTextControl tx = new TXTextControl.ServerTextControl())
    {
        tx.Create();

        var loadSettings = new TXTextControl.LoadSettings
        {
            PDFImportSettings = TXTextControl.PDFImportSettings.GenerateParagraphs
        };

        // load the PDF document
        tx.Load(pdfDocument, TXTextControl.BinaryStreamType.AdobePDF, loadSettings);

        // replace line breaks with spaces to get one continuous string
        string pdfText = tx.Text.Replace("\r\n", " ");

        // split the plain text into overlapping chunks
        return CreateChunks(pdfText, chunkSize, overlap);
    }
}

// split a text into chunks of chunkSize characters;
// adjacent chunks share overlap characters
private static List<string> CreateChunks(string text, int chunkSize, int overlap)
{
    // the window must advance by at least one character, otherwise the loop never ends
    if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize)
        throw new ArgumentOutOfRangeException(nameof(overlap), "Use 0 <= overlap < chunkSize.");

    List<string> chunks = new List<string>();

    // cut off chunks until the remaining text fits into a single chunk
    while (text.Length > chunkSize)
    {
        chunks.Add(text.Substring(0, chunkSize));

        // continue overlap characters before the end of the current chunk
        text = text.Substring(chunkSize - overlap);
    }

    // add the last chunk
    chunks.Add(text);

    return chunks;
}
```

The method accepts a chunk size and an overlap size. The chunk size should not be too small: the chunk is selected by matching keywords generated from the question, and a small chunk contains too little text to produce meaningful matches or to answer the question. A good value is a chunk size of about 2500 characters with an overlap of 50 characters.
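To see how the two parameters interact, the number of chunks can be computed in advance: every iteration of the CreateChunks loop cuts one chunk and advances by chunkSize - overlap characters. The following sketch derives this count; ExpectedChunkCount is a hypothetical helper for illustration, not part of the sample.

```csharp
using System;

// Hypothetical helper: how many chunks the CreateChunks loop produces
// for a text of `length` characters.
static int ExpectedChunkCount(int length, int chunkSize, int overlap)
{
    if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize)
        throw new ArgumentOutOfRangeException(nameof(overlap), "Use 0 <= overlap < chunkSize.");

    // A text that fits into one chunk is returned as a single chunk.
    if (length <= chunkSize)
        return 1;

    // The loop runs ceil((length - chunkSize) / stride) times,
    // then the remainder is added as the last chunk.
    int stride = chunkSize - overlap;
    return 1 + (length - chunkSize + stride - 1) / stride;
}

Console.WriteLine(ExpectedChunkCount(30_000, 2500, 50)); // prints 13
```

With the values used in this article (2500 and 50), the 14 chunks reported in the sample output correspond to a text of between 31,901 and 34,350 characters passed to CreateChunks.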

Why an overlap?

Important content may sit at the beginning or end of a chunk and be cut at the chunk boundary, so that neither chunk contains enough of it to be found based on the keywords. Repeating the end of each chunk at the beginning of the next one reduces this risk. The chunk overlap is the number of characters that adjacent chunks have in common.

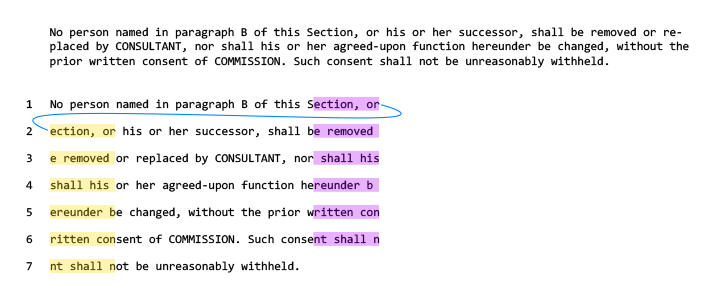

Consider the sample text in the illustration above, which is divided into chunks of 50 characters with an overlap of 10 characters. Chunks this small should not be used in real-world applications, because they don't contain enough information to find keyword matches and generate answers from the content; this size is used only to illustrate the concept.

The area marked in purple will be repeated at the beginning of the next chunk (marked in yellow).
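The behavior shown in the illustration can be reproduced without TX Text Control on a plain string. The sketch below mirrors the loop of CreateChunks, including an argument check that keeps the window moving forward, and applies the illustration's sizes of 50 and 10 characters to a generated 120-character sample text (the sample text is made up for this example).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Mirrors the loop of CreateChunks; the argument check keeps the window moving forward.
static List<string> CreateChunks(string text, int chunkSize, int overlap)
{
    if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize)
        throw new ArgumentOutOfRangeException(nameof(overlap), "Use 0 <= overlap < chunkSize.");

    var result = new List<string>();
    while (text.Length > chunkSize)
    {
        result.Add(text.Substring(0, chunkSize));
        // Step forward by chunkSize - overlap characters, so the last
        // `overlap` characters of this chunk start the next one.
        text = text.Substring(chunkSize - overlap);
    }
    result.Add(text); // the remainder, at most chunkSize characters
    return result;
}

// Generated 120-character sample text: the alphabet, repeated.
string sample = new string(Enumerable.Range(0, 120).Select(n => (char)('a' + n % 26)).ToArray());

// Same sizes as in the illustration: 50-character chunks, 10 characters overlap.
List<string> chunks = CreateChunks(sample, 50, 10);

for (int i = 0; i < chunks.Count; i++)
    Console.WriteLine($"{i} ({chunks[i].Length} chars): {chunks[i]}");
```

This produces three chunks of 50, 50, and 40 characters. The last 10 characters of each chunk (for example "opqrstuvwx" at the end of chunk 0) reappear at the start of the next one.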

Find Matches

After the PDF document has been imported and split into chunks, the next step is to find the chunks that match the question. To do this, OpenAI generates keywords and synonyms from the question.

This OpenAI call uses function calling so that the keywords are returned as a structured list. The method also uses the TXTextControl.OpenAI namespace, which has been extended with functions and their return values.

```csharp
public static List<string> GetKeywords(string text, int numKeywords = 10)
{
    // create a list to store the keywords
    List<string> keywords = new List<string>();

    string prompt = $"Create {numKeywords} keywords and synonyms from the following question that can be used to find information in a larger text. Create only 1 word per keyword. Return the keywords in lowercase only. Here is the question: {text}";

    // create a request object
    Request apiRequest = new Request
    {
        Messages = new[]
        {
            new RequestMessage
            {
                Role = "system",
                Content = $"Always provide {numKeywords} keywords that include relevant synonyms of words in the original question."
            },
            new RequestMessage
            {
                Role = "user",
                Content = prompt
            }
        },
        Functions = new[]
        {
            new Function
            {
                Name = "get_keywords",
                Description = "Use this function to give the user a list of keywords.",
                Parameters = new Parameters
                {
                    Type = "object",
                    Properties = new Properties
                    {
                        List = new ListProperty
                        {
                            Type = "array",
                            Items = new Items
                            {
                                Type = "string",
                                Description = "A keyword"
                            },
                            Description = "A list of keywords"
                        }
                    }
                },
                Required = new List<string> { "list" }
            }
        },
        FunctionCall = new FunctionCall
        {
            Name = "get_keywords",
            Arguments = "{'list'}"
        }
    };

    // get the response
    if (GetResponse(apiRequest) is Response response)
    {
        // return the keywords
        return System.Text.Json.JsonSerializer.Deserialize<ListReturnObject>(response.Choices[0].Message.FunctionCall.Arguments).List;
    }

    return null;
}
```

The method uses OpenAI to generate keywords and synonyms from the question, which are then used to find occurrences in the generated chunks. The idea is to select the best match and send only that chunk to OpenAI to generate the answer based on its content.
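GetKeywords deserializes the function call arguments into a ListReturnObject type that isn't shown in this article. As a minimal sketch, assuming the arguments arrive as a JSON object with a `list` array of strings (the shape declared in the function's parameters), they can also be read with JsonDocument. The normalization step is an addition: FindMatches searches lowercased chunk text, so a keyword returned with capital letters may never match if CountSubstring compares case-sensitively. ParseKeywords and the example arguments are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical parser for arguments shaped like {"list": ["a", "b"]}.
// Returns trimmed, lowercased, non-empty, distinct keywords.
static List<string> ParseKeywords(string argumentsJson)
{
    var result = new List<string>();
    using JsonDocument doc = JsonDocument.Parse(argumentsJson);

    if (doc.RootElement.ValueKind != JsonValueKind.Object ||
        !doc.RootElement.TryGetProperty("list", out JsonElement list) ||
        list.ValueKind != JsonValueKind.Array)
    {
        return result; // unexpected shape: no keywords
    }

    foreach (JsonElement item in list.EnumerateArray())
    {
        if (item.ValueKind != JsonValueKind.String) continue;

        // FindMatches searches lowercased text, so normalize to lowercase
        string word = (item.GetString() ?? "").Trim().ToLowerInvariant();
        if (word.Length > 0 && !result.Contains(word))
            result.Add(word);
    }
    return result;
}

// Invented function-call arguments, for illustration only.
string arguments = "{\"list\": [\"Contract\", \"partners\", \"subcontract\", \"partners\", \" \"]}";
List<string> keywords = ParseKeywords(arguments);
Console.WriteLine(string.Join(", ", keywords)); // prints: contract, partners, subcontract
```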

The FindMatches method returns a dictionary that maps each chunk index to a relevance score, sorted by descending relevance. The score is based on how often the keywords occur in a chunk, relative to how often each keyword occurs across all chunks.
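Written out with notation introduced here for illustration, where C is the set of trimmed, lowercased chunks, K the set of keywords, and occ(k, c) the number of occurrences of keyword k in chunk c, the score that FindMatches assigns to a chunk c is:

$$
\mathrm{score}(c) = \sum_{k \in K,\ N_k > 0} \frac{\mathrm{occ}(k, c)}{N_k}, \qquad N_k = \sum_{c' \in C} \mathrm{occ}(k, c')
$$

Because the occurrences of each keyword are divided by its total N_k, every keyword found in the document distributes a weight of exactly 1 across all chunks. A keyword that occurs in only one chunk therefore adds its full weight to that chunk, while a keyword spread over many chunks adds only a fraction to each.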

```csharp
// find matches in a list of chunks
public static Dictionary<int, double> FindMatches(List<string> chunks, List<string> keywords, int padding = 500)
{
    // total number of occurrences of each keyword across all chunks
    Dictionary<string, int> df = new Dictionary<string, int>();

    // relevance score per chunk index
    Dictionary<int, double> results = new Dictionary<int, double>();

    // lowercased chunks without padding
    List<string> trimmedChunks = new List<string>();

    // loop through the chunks
    for (int i = 0; i < chunks.Count; i++)
    {
        // trim padding characters from the start of every chunk except the first
        // and from the end of every chunk except the last;
        // Math.Min prevents an exception for chunks shorter than the padding
        string chunk = i != 0 ? chunks[i].Substring(Math.Min(padding, chunks[i].Length)) : chunks[i];
        chunk = i != chunks.Count - 1 ? chunk.Substring(0, chunk.Length - Math.Min(padding, chunk.Length)) : chunk;
        trimmedChunks.Add(chunk.ToLower());
    }

    // count the total occurrences of each keyword across all trimmed chunks
    foreach (string chunk in trimmedChunks)
    {
        foreach (string keyword in keywords)
        {
            // count the occurrences of the keyword in the chunk
            // (CountSubstring is not part of .NET; it is an extension method not shown here)
            int occurrences = chunk.CountSubstring(keyword);

            // initialize the counter for a new keyword
            if (!df.ContainsKey(keyword))
            {
                df[keyword] = 0;
            }

            // add the occurrences to the total
            df[keyword] += occurrences;
        }
    }

    // score each trimmed chunk
    for (int chunkId = 0; chunkId < trimmedChunks.Count; chunkId++)
    {
        // initialize the points
        double points = 0;

        foreach (string keyword in keywords)
        {
            // count the occurrences of the keyword in the chunk
            int occurrences = trimmedChunks[chunkId].CountSubstring(keyword);

            // add this chunk's share of all occurrences of the keyword
            if (df[keyword] > 0)
            {
                points += occurrences / (double)df[keyword];
            }
        }

        // add the points to the results
        results[chunkId] = points;
    }

    // return the results sorted by points (highest first)
    return results.OrderByDescending(x => x.Value).ToDictionary(x => x.Key, x => x.Value);
}
```
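FindMatches calls CountSubstring, a string extension method that is not shown in this article. The following sketch assumes it counts non-overlapping, case-sensitive occurrences; the local function is a hypothetical stand-in (as an extension method it would have to live in a static class). The sketch applies the same scoring as FindMatches to three tiny, invented chunks and leaves out the padding trim, because the strings are much shorter than the default padding of 500 characters.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the CountSubstring extension used by FindMatches:
// counts non-overlapping, ordinal (case-sensitive) occurrences of value in text.
static int CountSubstring(string text, string value)
{
    if (string.IsNullOrEmpty(value)) return 0;
    int count = 0;
    int index = 0;
    while ((index = text.IndexOf(value, index, StringComparison.Ordinal)) != -1)
    {
        count++;
        index += value.Length; // continue after the match, so matches don't overlap
    }
    return count;
}

// Three tiny, invented chunks (already lowercased, as FindMatches does).
var chunks = new List<string>
{
    "the contractor may not subcontract work.",
    "payment terms and invoices.",
    "subcontracts over the limit need approval; subcontract rules apply."
};
var keywords = new List<string> { "subcontract", "payment" };

// Same scoring as FindMatches, without the padding trim.
double[] scores = new double[chunks.Count];
foreach (string keyword in keywords)
{
    int total = chunks.Sum(c => CountSubstring(c, keyword));
    if (total == 0) continue;
    for (int i = 0; i < chunks.Count; i++)
        scores[i] += CountSubstring(chunks[i], keyword) / (double)total;
}

for (int i = 0; i < scores.Length; i++)
    Console.WriteLine($"chunk {i}: {scores[i]:F3}");
```

Chunk 1 gets the highest score (1.0) even though chunk 2 contains more keyword occurrences (two versus one): "payment" occurs only in chunk 1, so that chunk receives its full weight, while the three occurrences of "subcontract" are split between chunk 0 (1/3) and chunk 2 (2/3).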

Generate the Answer

After the best match has been found, the answer is generated from the content of that chunk. The chunk is sent to OpenAI together with the question, using the following prompt:

````csharp
$"```{chunk}```Your source is the information above. What is the answer to the following question? ```{question}```"
````

The complete method GetAnswer is shown in the code below.

````csharp
public static string GetAnswer(string chunk, string question)
{
    // create a prompt
    string prompt = $"```{chunk}```Your source is the information above. What is the answer to the following question? ```{question}```";

    // create a request object
    Request apiRequest = new Request
    {
        Messages = new[]
        {
            new RequestMessage
            {
                Role = "system",
                Content = "You should help to find an answer to a question in a document."
            },
            new RequestMessage
            {
                Role = "user",
                Content = prompt
            }
        }
    };

    // get the response
    if (GetResponse(apiRequest) is Response response)
    {
        // return the answer
        return response.Choices[0].Message.Content;
    }

    // return null if the response is null
    return null;
}
````
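The Request object of the TXTextControl.OpenAI namespace is not shown in this article. For orientation, the messages created by GetAnswer presumably correspond to a request body of the OpenAI Chat Completions API like the one this sketch builds with System.Text.Json. The model value is a placeholder, the chunk text is a stand-in, and how the sample's wrapper actually serializes its request is an assumption.

````csharp
using System;
using System.Text.Encodings.Web;
using System.Text.Json;

// Stand-in values for illustration.
string chunk = "(text of the best-matching chunk)";
string question = "Is contracting with other partners an option?";

// The same prompt as in GetAnswer.
string prompt = $"```{chunk}```Your source is the information above. What is the answer to the following question? ```{question}```";

// Chat Completions request body: a model name plus a list of role/content messages.
var payload = new
{
    model = "MODEL_NAME", // placeholder: the chat model you use
    messages = new[]
    {
        new { role = "system", content = "You should help to find an answer to a question in a document." },
        new { role = "user", content = prompt }
    }
};

var options = new JsonSerializerOptions
{
    WriteIndented = true,
    // avoid \uXXXX escapes for HTML-sensitive characters; fine here because
    // the body is sent to an API, not embedded in HTML
    Encoder = JavaScriptEncoder.UnsafeRelaxedJsonEscaping
};

string json = JsonSerializer.Serialize(payload, options);
Console.WriteLine(json);
````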

Running the Application

The application is a simple .NET console app that uses TX Text Control .NET Server for ASP.NET to import the PDF document and displays the answer generated by OpenAI. The methods shown above are called through the DocumentProcessing and GPTHelper classes.

```csharp
string question = "Is contracting with other partners an option?";
//string question = "How will disputes be dealt with?";
//string question = "Can the agreement be changed or modified?";

string pdfPath = "Sample PDFs/SampleContract-Shuttle.pdf";

// load the PDF file
byte[] pdfDocument = File.ReadAllBytes(pdfPath);

// split the PDF document into chunks of 2500 characters with an overlap of 50
var chunks = DocumentProcessing.Chunk(pdfDocument, 2500, 50);
Console.WriteLine($"{chunks.Count} chunks generated from: {pdfPath}");

// get the keywords
List<string> generatedKeywords = GPTHelper.GetKeywords(question, 20);

// GetKeywords returns null if no response is received
if (generatedKeywords == null)
{
    Console.WriteLine("No keywords could be generated.");
    return;
}

// find the matches and take the chunk with the highest score
var bestMatch = DocumentProcessing.FindMatches(chunks, generatedKeywords).First();

// print the match
Console.WriteLine($"The question: \"{question}\" was found in chunk {bestMatch.Key}.");

// generate and print the answer
Console.WriteLine("\r\n********\r\n" + GPTHelper.GetAnswer(chunks[bestMatch.Key], question));
```

A sample output is shown below:

```
14 chunks generated from: Sample PDFs/SampleContract-Shuttle.pdf
The question: "Is contracting with other partners an option?" was found in chunk 11.

********
No, contracting with other partners is not an option unless prior approval is obtained from the COMMISSION'S Contract Manager. The document specifies that subcontracting work under this Agreement is not allowed without prior written authorization, except for those identified in the approved Fee Schedule. Subcontracts over $25,000 must include the necessary provisions from the main Agreement and must be approved in writing by the COMMISSION'S Contract Manager.
```

The answer is generated from the content of the chunk that contains the best match for the question. It is based on the text of the PDF document: the model summarizes the relevant passages of that chunk rather than quoting them verbatim.

Conclusion

This article shows how to create a generative AI application for PDF documents using TX Text Control and OpenAI function calling in .NET C#. The application uses an OpenAI GPT model to answer questions about the content of a PDF document.

Test it yourself by downloading the sample application from our GitHub repository.