In a previous blog post, we used OpenAI's generative AI to rephrase sentences or to set the tone of a particular sentence at the current input position.

Learn more: Integrating OpenAI ChatGPT with TX Text Control to Rephrase Content

ChatGPT and generative AI in general can be used to set the tone of a sentence or to rephrase content by expanding or shortening it. That post shows how to integrate OpenAI's ChatGPT into TX Text Control's document editor.

In this article, we use the Chat Completions API with the gpt-3.5-turbo base model to generate content from a prompt interface.

Requirements: OpenAI Account

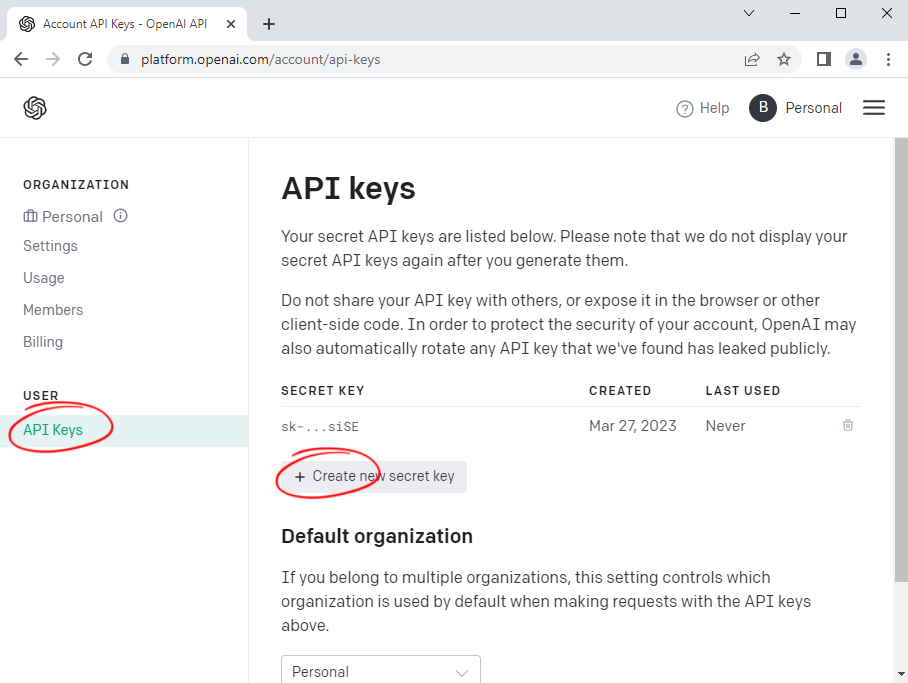

To use this example, you need to create an OpenAI account with a generated API Key.

https://platform.openai.com/signup

Under User -> API Keys, create a new API Key by clicking Create new secret key.

Ribbon UI

In this example, the additional ribbon group is created as an HTML document. It is located in the wwwroot folder and dynamically loaded into the DOM:

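```javascript
// Load the additional ribbon group once the ribbon tabs are available
TXTextControl.addEventListener("ribbonTabsLoaded", function (e) {
    $.get("/RibbonExtensions/ChatGpt/chatgpt_group.html", function (data) {
        // Insert the group markup after the clipboard group
        $("#ribbonGroupClipboard").after(data);
    });
});
```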

The chatgpt_group.html file contains the HTML, CSS and JavaScript needed to send an HTTP POST request to the PromptRequest method of the ChatGPTController controller.

Calling the Controller

The prompt is sent via an asynchronous HTTP request to the PromptRequest Web API endpoint.

```javascript
function requestPrompt() {
    const offset = $("#mainCanvas").offset();
    const $spinner = $("#chatgpt-spinner").css({ top: offset.top, left: offset.left, display: "block" });
    const prompt = $("#prompt").val();
    const serviceURL = "ChatGPT/PromptRequest";

    $.ajax({
        type: "POST",
        url: serviceURL,
        contentType: "application/json",
        data: JSON.stringify({ text: prompt }),
        success: successFunc,
        error: errorFunc
    });

    function hideSpinner() {
        $spinner.css({ display: "none" });
    }

    function successFunc(data) {
        TXTextControl.selection.load(TXTextControl.StreamType.HTMLFormat, data);
        hideSpinner();
    }

    function errorFunc(data) {
        console.log(data);
        hideSpinner();
    }
}
```
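For projects that do not use jQuery, the same request could be sketched with the Fetch API. This is only an illustration under assumptions: `buildPromptRequest` and `sendPrompt` are hypothetical helper names that are not part of the sample, and the sketch assumes that the endpoint returns the Base64 string as plain text.

```javascript
// Hypothetical helper: builds the fetch options for the PromptRequest endpoint.
// The payload shape ({ text: ... }) matches the jQuery call in requestPrompt.
function buildPromptRequest(prompt) {
    return {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ text: prompt })
    };
}

// Hypothetical fetch-based variant of the request.
// fetchImpl can be replaced with a stub, which keeps the function testable.
async function sendPrompt(prompt, fetchImpl = fetch) {
    const response = await fetchImpl("ChatGPT/PromptRequest", buildPromptRequest(prompt));
    if (!response.ok) {
        throw new Error("Prompt request failed with status " + response.status);
    }
    // Assumption: the body is the Base64 string as plain text
    const data = await response.text();
    if (!data) {
        // For example, when the controller had nothing to return
        throw new Error("Prompt request returned no content");
    }
    return data;
}
```

In the browser, the resolved value could be handed to `TXTextControl.selection.load` in the same way as in `successFunc`, with the spinner hidden in a `finally` block.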

Calling OpenAI

The controller method adds a phrase to the prompt that asks for the content to be returned as formatted HTML. This prompt is then sent to the OpenAI Chat Completions API.

```csharp
[HttpPost]
public string PromptRequest([FromBody] ChatGPTRequest request)
{
    // Phrase appended to the prompt so that the result is returned as HTML
    var htmlSuffix = ". Create the results in formatted HTML format with h1, p and li elements.";

    // Remove a trailing full stop, if present
    if (request.Text.EndsWith("."))
    {
        request.Text = request.Text.TrimEnd('.');
    }

    // Authenticate against the OpenAI API with the API key
    HttpClient client = new HttpClient
    {
        DefaultRequestHeaders =
        {
            Authorization = new AuthenticationHeaderValue("Bearer", Models.Constants.OPENAI_API_KEY)
        }
    };

    // Build the chat request: a system message and the user prompt
    Request apiRequest = new Request
    {
        Messages = new[]
        {
            new RequestMessage
            {
                Role = "system",
                Content = "You are a helpful assistant."
            },
            new RequestMessage
            {
                Role = "user",
                Content = request.Text + htmlSuffix
            }
        }
    };

    StringContent content = new StringContent(
        System.Text.Json.JsonSerializer.Serialize(apiRequest),
        Encoding.UTF8,
        "application/json"
    );

    // Send the request and block until the response is available
    HttpResponseMessage httpResponseMessage = client.PostAsync(
        "https://api.openai.com/v1/chat/completions",
        content
    ).Result;

    if (httpResponseMessage.IsSuccessStatusCode)
    {
        Response response = System.Text.Json.JsonSerializer.Deserialize<Response>(
            httpResponseMessage.Content.ReadAsStringAsync().Result
        );

        // Return the first choice as a Base64-encoded UTF-8 string
        var plainTextBytes = System.Text.Encoding.UTF8.GetBytes(response.Choices[0].Message.Content);
        return System.Convert.ToBase64String(plainTextBytes);
    }

    return null;
}
```
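The prompt handling in the controller can be isolated into a few small functions. The following JavaScript sketch mirrors the C# logic for illustration only: `buildUserPrompt`, `buildChatRequest` and `extractContent` are hypothetical names, and because the `Request`, `RequestMessage` and `Response` classes are not shown here, the sketch assumes that they map to the JSON shape documented for the Chat Completions API (`model`, `messages`, `role`, `content`, `choices`).

```javascript
// Same phrase the controller appends to every prompt
const HTML_SUFFIX = ". Create the results in formatted HTML format with h1, p and li elements.";

// Mirrors request.Text.TrimEnd('.') followed by the concatenation:
// all trailing full stops are removed before the suffix is appended.
function buildUserPrompt(text) {
    return text.replace(/\.+$/, "") + HTML_SUFFIX;
}

// Assumed JSON body of the Chat Completions request.
// The model name is taken from the article; how the sample sets it is not shown.
function buildChatRequest(text, model = "gpt-3.5-turbo") {
    return {
        model: model,
        messages: [
            { role: "system", content: "You are a helpful assistant." },
            { role: "user", content: buildUserPrompt(text) }
        ]
    };
}

// Mirrors response.Choices[0].Message.Content, with a guard for empty results
function extractContent(response) {
    if (!response || !Array.isArray(response.choices) || response.choices.length === 0) {
        return null;
    }
    return response.choices[0].message.content;
}
```

With this sketch, `buildUserPrompt("Write a short welcome letter.")` yields "Write a short welcome letter. Create the results in formatted HTML format with h1, p and li elements.", so the prompt never ends up with a doubled full stop before the suffix.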

The selection.load method, which loads text in a certain format into the current selection, is then used to load the returned HTML into the document.

```javascript
TXTextControl.selection.load(TXTextControl.StreamType.HTMLFormat, data);
```
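The controller returns the generated HTML as a Base64-encoded UTF-8 string, and that string is passed to `selection.load` unchanged. To try the call with static HTML, or to inspect what the server returned, the encoding can be reproduced on the client. A minimal sketch, assuming a runtime that provides `TextEncoder`, `TextDecoder`, `btoa` and `atob` (current browsers and recent Node.js versions); `toBase64Utf8` and `fromBase64Utf8` are hypothetical helper names:

```javascript
// Encodes a string as Base64 over its UTF-8 bytes, comparable to
// Encoding.UTF8.GetBytes followed by Convert.ToBase64String in the controller.
function toBase64Utf8(text) {
    const bytes = new TextEncoder().encode(text);
    let binary = "";
    for (const b of bytes) {
        binary += String.fromCharCode(b);
    }
    return btoa(binary);
}

// Decodes a Base64 string back into text, interpreting the bytes as UTF-8
function fromBase64Utf8(base64) {
    const binary = atob(base64);
    const bytes = Uint8Array.from(binary, (ch) => ch.charCodeAt(0));
    return new TextDecoder("utf-8").decode(bytes);
}
```

For example, `toBase64Utf8("<p>Hi</p>")` returns `PHA+SGk8L3A+`, which could be passed as the second argument of `TXTextControl.selection.load`, while `fromBase64Utf8(data)` makes the HTML returned by the server readable in the console.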

You can download the sample project for your own testing from our GitHub repository.