Word processing and document generation are complex tasks, and TX Text Control is built to process a single document as fast as possible. TX Text Control implements critical sections that access shared resources and must be executed atomically. As a result, multiple threads that use TX Text Control may have to wait for each other under very specific circumstances. This design makes TX Text Control thread-safe, but it can make specific applications slower.

To merge hundreds or thousands of documents in batch processes, a true multi-process implementation is recommended, which can make the overall merge up to about three times as fast.

The sample shows how to merge all templates in a folder with data and export the results as Adobe PDF files to another folder in a batch process.

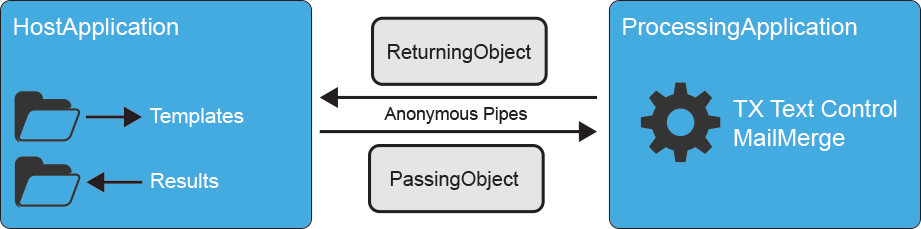

The solution consists of two parts:

- A HostApplication that reads the files from a folder and saves the results to another folder.

- A ProcessingApplication that uses TX Text Control to process each document in a new process.

The HostApplication calls the MergeDocument method for each file inside a .NET Parallel.ForEach loop:

```csharp
// read all templates
string[] files = Directory.GetFiles(sTemplateFolder);

// loop through all files in parallel
Parallel.ForEach(files, (currentFile) =>
{
    MergeDocument(currentFile, report); // merge template
});
```

The MergeDocument method creates a transportation object (PassingObject) that holds the document as a byte array and the merge data as a JSON string. It then calls CallProcessingApp with this object; that method creates a new process and communicates with it using anonymous pipes.

```csharp
private void MergeDocument(string Filename, object Data)
{
    // create a new PassingObject that is used to send
    // data to the ProcessingApplication using pipes
    PassingObject dataObject = new PassingObject()
    {
        Data = JsonConvert.SerializeObject(Data),
        Document = File.ReadAllBytes(Filename)
    };

    // call the processing app and pass the data object
    ReturningObject returnObject = ParallelProcessing.CallProcessingApp(dataObject);

    // create the destination folder if it doesn't exist
    Directory.CreateDirectory(sResultsFolder);

    // write the returned byte array as a file
    File.WriteAllBytes(
        Path.Combine(sResultsFolder, Path.GetFileNameWithoutExtension(Filename) + ".pdf"),
        returnObject.Document);
}
```
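The transportation objects are not shown in the article, so the following is only a minimal sketch of how they might look. The member names (`Data`, `Document`, `Error`) are taken from the code in this article, but the class shapes and the `TransportDemo` helper are assumptions. To stay free of external packages, the sketch uses `System.Text.Json` instead of the Json.NET `JsonConvert` class used in the sample; both encode a `byte[]` as a Base64 string and produce unindented output by default.

```csharp
using System;
using System.Text.Json;

// Hypothetical shapes; only the member names come from the article's code.
public class PassingObject
{
    public string Data { get; set; }      // merge data as a JSON string
    public byte[] Document { get; set; }  // template bytes
}

public class ReturningObject
{
    public byte[] Document { get; set; }  // resulting PDF bytes
    public string Error { get; set; }     // exception message if the merge failed
}

public static class TransportDemo
{
    // Serializes the object into a single line of JSON.
    public static string Pack(PassingObject obj) => JsonSerializer.Serialize(obj);

    // Restores the object from one line of JSON.
    public static PassingObject Unpack(string line) =>
        JsonSerializer.Deserialize<PassingObject>(line);

    public static void Main()
    {
        var original = new PassingObject
        {
            Data = "{\"company\":\"Line 1\\nLine 2\"}",
            Document = new byte[] { 1, 2, 3 }
        };

        string packed = Pack(original);
        PassingObject restored = Unpack(packed);

        // Line breaks inside strings are escaped, so the payload stays on one line.
        Console.WriteLine(packed.Contains("\n") ? "multi-line" : "single line");
        Console.WriteLine(restored.Document.Length);
    }
}
```

Because the serialized object occupies exactly one line, a line-oriented reader on the other end of the pipe can pick it up with a single `ReadLine` call.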

Essentially, the CallProcessingApp method creates a new process, synchronizes the pipe streams, sends the PassingObject, and waits for the synchronized return object from the process. The ReturningObject then contains the created PDF document as a byte array.
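The synchronization step can be pictured as a small line-based framing protocol: a `SYNC` line, the payload, then an `END` line. The sketch below isolates that framing and runs it over in-memory readers and writers instead of real pipes. `PipeFraming`, `WriteMessage`, and `ReadMessage` are hypothetical names, and the host side of the actual sample may be implemented differently.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class PipeFraming
{
    // Writes one message: a SYNC line, the payload line, then an END line.
    public static void WriteMessage(TextWriter writer, string payload)
    {
        writer.WriteLine("SYNC");
        writer.WriteLine(payload);
        writer.WriteLine("END");
        writer.Flush();
    }

    // Reads one message. Returns null if the stream ends before SYNC arrives.
    public static List<string> ReadMessage(TextReader reader)
    {
        string line;

        // skip everything up to the sync marker
        do
        {
            line = reader.ReadLine();
            if (line == null) return null; // end of stream: stop instead of spinning
        } while (!line.StartsWith("SYNC"));

        // collect lines until the end marker (or the end of the stream)
        var lines = new List<string>();
        while ((line = reader.ReadLine()) != null && !line.StartsWith("END"))
        {
            lines.Add(line);
        }
        return lines;
    }

    public static void Main()
    {
        // In-memory stand-in for the two pipe streams.
        var buffer = new StringWriter();
        WriteMessage(buffer, "{\"Data\":\"{}\",\"Document\":\"AQID\"}");

        List<string> received = ReadMessage(new StringReader(buffer.ToString()));
        Console.WriteLine(received[0]);
    }
}
```

This framing relies on the payload never starting with `SYNC` or `END`. A serialized JSON object always starts with `{`, so that holds for the transportation objects.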

The ProcessingApplication is referenced by the HostApplication and contains the CallProcessingApp method and the transportation data models. The application itself is also a console application that launches a copy of itself as a new process in order to process the documents. The following code shows its Main method, which synchronizes the pipe streams to receive and return the transportation objects and merges the template with the given JSON data using TX Text Control:

```csharp
// namespaces used: System, System.Collections.Generic, System.IO, System.IO.Pipes,
// Newtonsoft.Json, TXTextControl.DocumentServer
static void Main(string[] args)
{
    if (args == null || args.Length < 2) return;

    // get read and write pipe handles
    // roles are reversed from how the other process is passing the handles
    string pipeWriteHandle = args[0];
    string pipeReadHandle = args[1];

    // create 2 anonymous pipes for duplex communications
    using (var pipeRead = new AnonymousPipeClientStream(PipeDirection.In, pipeReadHandle))
    using (var pipeWrite = new AnonymousPipeClientStream(PipeDirection.Out, pipeWriteHandle))
    {
        try
        {
            var lsValues = new List<string>();

            // get message from hosting process
            using (var sr = new StreamReader(pipeRead))
            {
                string sTempMessage;

                // wait for "sync message" from the other process
                do
                {
                    sTempMessage = sr.ReadLine();

                    // ReadLine returns null once the host closes the pipe;
                    // exit instead of looping forever
                    if (sTempMessage == null) return;
                } while (!sTempMessage.StartsWith("SYNC"));

                // read until "end message" from the server
                while ((sTempMessage = sr.ReadLine()) != null && !sTempMessage.StartsWith("END"))
                {
                    lsValues.Add(sTempMessage);
                }
            }

            // send value to calling process
            using (var sw = new StreamWriter(pipeWrite))
            {
                sw.AutoFlush = true;

                // send a "sync message" and wait
                sw.WriteLine("SYNC");
                pipeWrite.WaitForPipeDrain(); // wait here

                PassingObject dataObject =
                    JsonConvert.DeserializeObject<PassingObject>(lsValues[0]);
                ReturningObject returnObject = new ReturningObject();

                try
                {
                    // create a new ServerTextControl for the document processing
                    using (TXTextControl.ServerTextControl tx =
                        new TXTextControl.ServerTextControl())
                    {
                        tx.Create();
                        tx.Load(dataObject.Document,
                            TXTextControl.BinaryStreamType.InternalUnicodeFormat);

                        using (MailMerge mailMerge = new MailMerge())
                        {
                            mailMerge.TextComponent = tx;
                            mailMerge.MergeJsonData(dataObject.Data.ToString());
                        }

                        byte[] data;
                        tx.Save(out data, TXTextControl.BinaryStreamType.AdobePDF);
                        returnObject.Document = data;
                    }

                    sw.WriteLine(JsonConvert.SerializeObject(returnObject));
                    sw.WriteLine("END");
                }
                catch (Exception exc)
                {
                    // report the failure to the host instead of a document
                    returnObject.Error = exc.Message;
                    sw.WriteLine(JsonConvert.SerializeObject(returnObject));
                    sw.WriteLine("END");
                }
            }
        }
        catch
        {
            // pipe errors are swallowed; the process simply exits
        }
    }
}
```

Based on the sample templates in this demo, sequential processing of 100 templates takes about 45 seconds on a 16-core CPU, while parallel processing takes about 14 seconds, which is roughly three times faster.
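Since every loop iteration starts a separate process, it may be worth capping how many run at the same time, for example at the number of CPU cores. The following sketch shows one way to do that with `ParallelOptions.MaxDegreeOfParallelism`. `BoundedBatch` and `Run` are hypothetical names, and the lambda only stands in for the MergeDocument call; whether a cap improves throughput depends on the machine and the templates.

```csharp
using System;
using System.Collections.Concurrent;
using System.IO;
using System.Threading.Tasks;

public static class BoundedBatch
{
    // Runs 'work' for every item with at most 'maxParallel' items in flight.
    public static ConcurrentBag<string> Run(
        string[] items, int maxParallel, Func<string, string> work)
    {
        var results = new ConcurrentBag<string>();
        var options = new ParallelOptions { MaxDegreeOfParallelism = maxParallel };

        Parallel.ForEach(items, options, item => results.Add(work(item)));
        return results;
    }

    public static void Main()
    {
        string[] files = { "invoice.tx", "letter.tx", "report.tx" };

        // Stand-in for MergeDocument: only derives the output file name.
        ConcurrentBag<string> done = Run(
            files,
            Environment.ProcessorCount,
            file => Path.ChangeExtension(file, ".pdf"));

        Console.WriteLine(done.Count);
    }
}
```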

The concept is modular and flexible. By adding members to the transportation object, you could pass settings such as a return file format other than PDF or specific merge settings.
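As a rough illustration of such an extension, the sketch below adds a return format to the transportation object. `ReturnFormat`, `PassingObjectWithFormat`, and `FormatHelper` are hypothetical and not part of the sample. In the ProcessingApplication, the chosen value would additionally have to be mapped to the matching TX Text Control stream type in the `Save` call, such as `BinaryStreamType.AdobePDF`, which the sample uses.

```csharp
using System;

// Hypothetical setting; not part of the sample project.
public enum ReturnFormat { Pdf, Docx }

// Hypothetical variant of the transportation object with one extra member.
public class PassingObjectWithFormat
{
    public string Data { get; set; }      // merge data as a JSON string
    public byte[] Document { get; set; }  // template bytes

    // Defaults to PDF so existing callers keep their current behavior.
    public ReturnFormat Format { get; set; } = ReturnFormat.Pdf;
}

public static class FormatHelper
{
    // The host can use this to name the result file.
    public static string ExtensionFor(ReturnFormat format)
    {
        switch (format)
        {
            case ReturnFormat.Pdf: return ".pdf";
            case ReturnFormat.Docx: return ".docx";
            default: throw new ArgumentOutOfRangeException(nameof(format));
        }
    }

    public static void Main()
    {
        var request = new PassingObjectWithFormat { Format = ReturnFormat.Docx };
        Console.WriteLine("result" + ExtensionFor(request.Format));
    }
}
```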

You can download this sample from our GitHub repository and try it yourself. The sample uses our Windows Forms version, but the concept is valid for all types of applications, including WPF and ASP.NET.