LLMs and generative AI can be used in many aspects of document processing, including content generation, analysis of documents such as contracts, and document classification. Typical use cases include summarizing documents or extracting key facts from a contract or agreement. In legal workflows, semi-automated processes driven by appropriate prompts can help paralegals and attorneys analyze contracts and find errors or loopholes in agreements.

Another typical use case is document classification. An automated process that determines whether a document is an invoice, quote, or contract can help route documents to the right workflows. In this example, we will use TX Text Control to import the text of a PDF document and then use the OpenAI API for analysis.

The idea is to assign a single tag to incoming documents. We are going to use a predefined set of tags and let OpenAI do the work for us.

OpenAI Namespace

To make the request to the OpenAI API, we will use the TX Text Control namespace TXTextControl.OpenAI, which encapsulates all the classes needed to make a request.

```csharp
using System.Text.Json.Serialization;

namespace TXTextControl.OpenAI {

    public class ChatGPTRequest
    {
        public string Text { get; set; }
        public string Type { get; set; }
    }

    // A single chat message; Role is "system", "user", or "assistant".
    public class RequestMessage
    {
        [JsonPropertyName("role")]
        public string Role { get; set; }
        [JsonPropertyName("content")]
        public string Content { get; set; }
    }

    // The request body sent to the API.
    public class Request
    {
        [JsonPropertyName("model")]
        public string Model { get; set; } = "gpt-3.5-turbo";
        [JsonPropertyName("max_tokens")]
        public int MaxTokens { get; set; } = 100;
        [JsonPropertyName("messages")]
        public RequestMessage[] Messages { get; set; }
    }

    // The response body returned by the API.
    public class Response
    {
        [JsonPropertyName("id")]
        public string Id { get; set; }
        [JsonPropertyName("created")]
        public int Created { get; set; }
        [JsonPropertyName("model")]
        public string Model { get; set; }
        [JsonPropertyName("usage")]
        public ResponseUsage Usage { get; set; }
        [JsonPropertyName("choices")]
        public ResponseChoice[] Choices { get; set; }
    }

    public class ResponseUsage
    {
        [JsonPropertyName("prompt_tokens")]
        public int PromptTokens { get; set; }
        [JsonPropertyName("completion_tokens")]
        public int CompletionTokens { get; set; }
        [JsonPropertyName("total_tokens")]
        public int TotalTokens { get; set; }
    }

    public class ResponseChoice
    {
        [JsonPropertyName("message")]
        public ResponseMessage Message { get; set; }
        [JsonPropertyName("finish_reason")]
        public string FinishReason { get; set; }
        [JsonPropertyName("index")]
        public int Index { get; set; }
    }

    public class ResponseMessage
    {
        [JsonPropertyName("role")]
        public string Role { get; set; }
        [JsonPropertyName("content")]
        public string Content { get; set; }
    }

    public class ChatGPTResponse
    {
        public string Id { get; set; }
        public Choice[] Choices { get; set; }
    }

    public class Choice
    {
        public string Text { get; set; }
        public int Index { get; set; }
    }

    public class Constants
    {
        // Insert your OpenAI API key here.
        public static string OPENAI_API_KEY = "";
    }
}
```
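To make the mapping between these classes and the JSON on the wire more concrete, the following sketch reads the assistant's reply out of a response payload. The sample JSON is hand-written for illustration and is not captured from a real API call, and `ExtractFirstMessageContent` is a hypothetical helper name. It uses `JsonDocument` instead of the classes above only so that the snippet is self-contained.

```csharp
using System;
using System.Text.Json;

// Hand-written sample payload (an assumption for illustration, not real API output).
const string sampleJson = @"{
  ""id"": ""chatcmpl-sample"",
  ""model"": ""gpt-3.5-turbo"",
  ""choices"": [
    {
      ""index"": 0,
      ""finish_reason"": ""stop"",
      ""message"": {
        ""role"": ""assistant"",
        ""content"": ""invoice:0.8, receipt:0.2, contract:0, quotation:0, agreement:0, other:0""
      }
    }
  ]
}";

Console.WriteLine(ExtractFirstMessageContent(sampleJson));

// Returns choices[0].message.content, or null if the payload contains no choices.
static string? ExtractFirstMessageContent(string json)
{
    using JsonDocument doc = JsonDocument.Parse(json);

    if (!doc.RootElement.TryGetProperty("choices", out JsonElement choices)
        || choices.ValueKind != JsonValueKind.Array
        || choices.GetArrayLength() == 0)
    {
        return null;
    }

    return choices[0].GetProperty("message").GetProperty("content").GetString();
}
```

The same path corresponds to `Choices[0].Message.Content` when the payload is deserialized into the `Response` class.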

As the OpenAI model, we are going to use gpt-3.5-turbo, and the endpoint used is the Chat Completions API.

The system prompt (the instructions) defines the model's behavior or personality.

```csharp
Request apiRequest = new Request
{
    Messages = new[]
    {
        new RequestMessage
        {
            Role = "system",
            Content = "You are a helpful assistant in the classification of documents."
        },
        new RequestMessage
        {
            Role = "user",
            Content = requestPrompt
        }
    }
};
```
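The article does not show how the request is sent, so the following is only a sketch of one possible way to do it with `HttpClient`. `BuildChatRequest` is a hypothetical helper, and anonymous types stand in for the `Request` and `RequestMessage` classes to keep the snippet self-contained. The endpoint URL and the Bearer authorization header follow the public OpenAI API conventions.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;
using System.Text.Json;

// Builds the POST request for the Chat Completions endpoint without sending it.
static HttpRequestMessage BuildChatRequest(string apiKey, string systemPrompt, string userPrompt)
{
    // Anonymous types are used here for brevity (an assumption of this sketch);
    // they produce the same JSON shape as a system message plus a user message.
    var payload = new
    {
        model = "gpt-3.5-turbo",
        max_tokens = 100,
        messages = new[]
        {
            new { role = "system", content = systemPrompt },
            new { role = "user", content = userPrompt }
        }
    };

    var request = new HttpRequestMessage(
        HttpMethod.Post, "https://api.openai.com/v1/chat/completions");

    // The API key is passed as a Bearer token.
    request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
    request.Content = new StringContent(
        JsonSerializer.Serialize(payload), Encoding.UTF8, "application/json");

    return request;
}
```

Sending the request would then be a matter of calling `await client.SendAsync(request)` on an `HttpClient` instance and reading the response body as a string.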

Prompt Engineering

The most difficult part is to find the right prompt with the exact description to instruct the model to generate the output you need. Giving precise instructions so that OpenAI does not invent additional tags is the key to this prompt. Below is the prompt used for this scenario.

```csharp
string requestPrompt = "You will need to classify the documents into tags. ";
requestPrompt += "Possible tags are: 'invoice', 'receipt', 'contract', 'quotation', 'agreement', 'other'. ";
requestPrompt += "The tags are keywords that summarize the document's content. ";
requestPrompt += "For each tag provided, you must specify the probability that the document belongs to that tag. ";
requestPrompt += "The tags will be provided on a list. ";
requestPrompt += "You must limit yourself to the supplied tags. ";
requestPrompt += "You must not add other tags that are not in the list. ";
requestPrompt += "All of the tags must be included when you reply. ";
requestPrompt += "Only return the tags and no additional text. ";
requestPrompt += "The following is a sample of the output you must return. You must return the same list, but replace the symbol 'RANKING' with the associated probability: ";
requestPrompt += "invoice:RANKING, receipt:RANKING, contract:RANKING, quotation:RANKING, agreement:RANKING, other:RANKING ";
requestPrompt += "The following is the document to analyze: ";
```

The idea is to provide a list of possible tags and instruct the model to use only those tags in its output, each with a probability value.
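Because the tag list appears twice in the prompt (once as the list of allowed tags and once in the sample output), the two can drift apart when tags are added later. As a possible variation, the prompt could be generated from a single array. `BuildClassificationPrompt` is a hypothetical helper that is not part of the sample; the wording of the prompt is taken from the article.

```csharp
using System;
using System.Linq;

string[] tags = { "invoice", "receipt", "contract", "quotation", "agreement", "other" };
Console.WriteLine(BuildClassificationPrompt(tags));

// Builds the classification prompt from one tag list so that the allowed tags
// and the sample output line always match.
static string BuildClassificationPrompt(string[] tags)
{
    string quotedTags = string.Join(", ", tags.Select(t => $"'{t}'"));
    string outputTemplate = string.Join(", ", tags.Select(t => $"{t}:RANKING"));

    return "You will need to classify the documents into tags. "
        + $"Possible tags are: {quotedTags}. "
        + "The tags are keywords that summarize the document's content. "
        + "For each tag provided, you must specify the probability that the document belongs to that tag. "
        + "The tags will be provided on a list. "
        + "You must limit yourself to the supplied tags. "
        + "You must not add other tags that are not in the list. "
        + "All of the tags must be included when you reply. "
        + "Only return the tags and no additional text. "
        + "The following is a sample of the output you must return. You must return the same list, "
        + "but replace the symbol 'RANKING' with the associated probability: "
        + $"{outputTemplate} "
        + "The following is the document to analyze: ";
}
```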

Sample Console Application

The following code shows a sample console application that imports a PDF document and sends the text to the OpenAI API. The response is then parsed and the tags are extracted. The Contents.Lines class (DocumentServer.PDF namespace), which implements functionality to find DocumentServer.PDF.Contents.ContentLine objects in a PDF document, is used to import the text lines of a PDF document in the method ExtractDocumentText.

```csharp
using System;
using System.Globalization;
using System.Linq;
using TXTextControl.DocumentServer.PDF.Contents;

// Note: despite its name, this value is applied below as a character limit
const int MAX_TOKENS = (3897 / 4);

// read filename from user input
Console.WriteLine("Enter the path to the document to classify:");
string filePath = Console.ReadLine();

// return if file does not exist
if (!System.IO.File.Exists(filePath))
{
    Console.WriteLine("File does not exist.");
    return;
}

var documentText = ExtractDocumentText(filePath);

// Limit the length of the text sent to the model
if (documentText.Length > MAX_TOKENS)
{
    documentText = documentText.Substring(0, MAX_TOKENS);
}

// Classify document
string results = OpenAIChatHelper.ClassifyDocument(documentText);

// Output results to console
Console.WriteLine(results);
Console.WriteLine("Highest probability: " + GetHighestRankingEntry(results));

// Concatenates the text of all lines found in the PDF document
static string ExtractDocumentText(string filePath)
{
    var documentText = "";

    Lines lines = new Lines(filePath);

    foreach (ContentLine line in lines.ContentLines)
    {
        documentText += line.Text;
    }

    return documentText;
}

// Parses "tag:probability, tag:probability, ..." and returns the tag with the highest value
static string GetHighestRankingEntry(string rankings)
{
    // Parse with the invariant culture so that "0.8" is read correctly
    // regardless of the regional settings of the machine
    var entries = rankings.Split(',')
        .Select(entry => entry.Trim().Split(':'))
        .ToDictionary(pair => pair[0], pair => double.Parse(pair[1], CultureInfo.InvariantCulture));

    var highestEntry = entries.Aggregate((l, r) => l.Value > r.Value ? l : r);

    return $"{highestEntry.Key}";
}
```
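The sample limits the document text to a fixed number of characters before sending it. As a possible alternative, the limit could be expressed in tokens and converted to characters. The sketch below assumes roughly four characters per token, which is only a rule of thumb for English text and not an exact count, and it cuts at the last space so that no word is split. `TruncateToTokenBudget` is a hypothetical helper that is not part of the sample.

```csharp
using System;

// Assumption: about 4 characters per token for English text (rule of thumb only).
const int ApproxCharsPerToken = 4;

Console.WriteLine(TruncateToTokenBudget("alpha beta gamma", 2, ApproxCharsPerToken));

// Shortens the text to approximately maxTokens tokens, preferring a word boundary.
static string TruncateToTokenBudget(string text, int maxTokens, int charsPerToken)
{
    int maxChars = maxTokens * charsPerToken;

    if (maxChars <= 0) return "";
    if (text.Length <= maxChars) return text;

    // Search backwards from the limit for a space to avoid cutting a word in half.
    int cut = text.LastIndexOf(' ', maxChars - 1);

    return cut > 0 ? text.Substring(0, cut) : text.Substring(0, maxChars);
}
```

An exact limit would require the tokenizer of the model that is used, so the character estimate should be treated as approximate.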

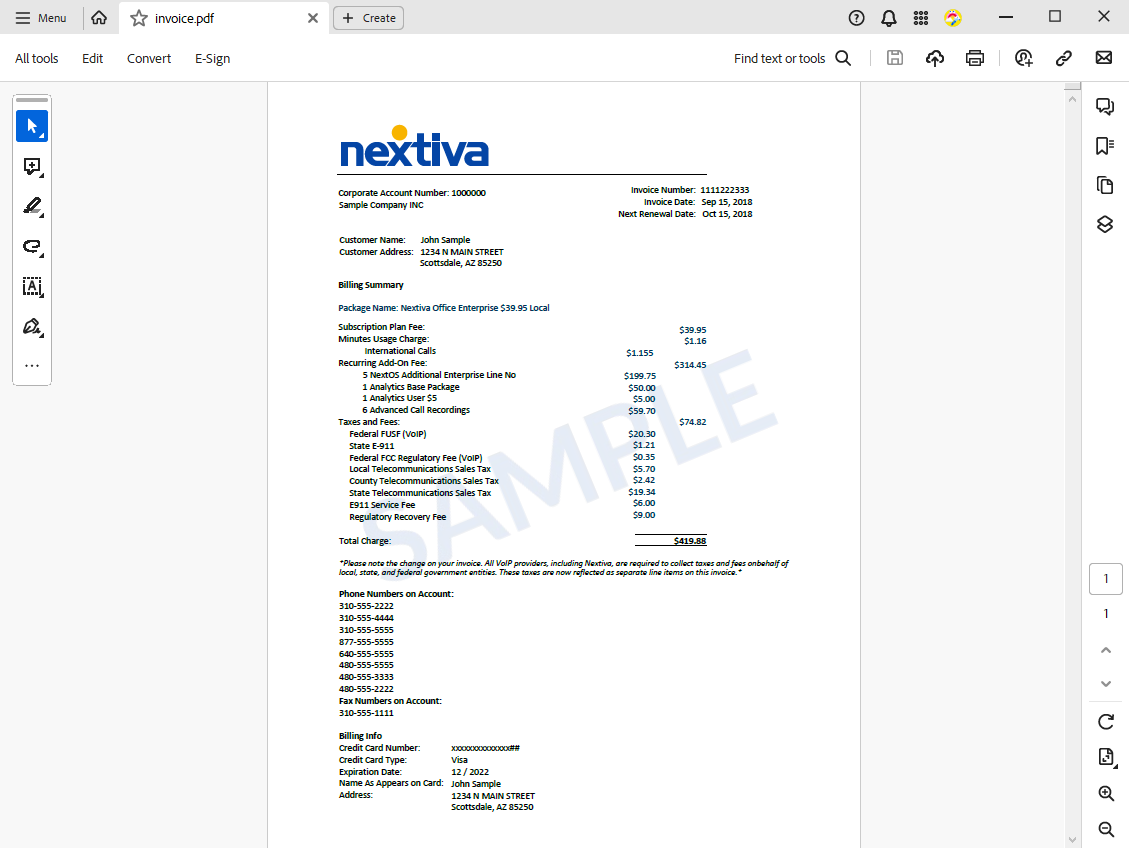

The sample comes with a set of sample PDFs randomly collected from a Google search. The following screenshot shows the PDF in Acrobat Reader.

When starting the application, the user is prompted for a document path to analyze.

```
Enter the path to the document to classify:
Documents\invoice.pdf
```

After entering the document name, the document is imported and sent to OpenAI for analysis. The results will be written to the console.

```
Enter the path to the document to classify:
Documents\invoice.pdf
invoice:0.8, receipt:0.2, contract:0, quotation:0, agreement:0, other:0
Highest probability: invoice
```

OpenAI returns exactly the string we requested with all tags and the probability. In this case, OpenAI has determined that the input document is an invoice, which is perfectly correct.
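A language model is not guaranteed to follow the requested format on every call, so a production workflow may want to parse the reply more defensively. The following sketch, in which `ParseRankings` is a hypothetical helper that is not part of the sample, skips malformed entries and tags that are not in the allowed list instead of throwing an exception.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

string[] allowedTags = { "invoice", "receipt", "contract", "quotation", "agreement", "other" };

var rankings = ParseRankings(
    "invoice:0.8, receipt:0.2, contract:0, quotation:0, agreement:0, other:0", allowedTags);
Console.WriteLine(rankings["invoice"]);

// Parses "tag:probability" pairs and ignores anything that is malformed or not allowed.
static Dictionary<string, double> ParseRankings(string response, string[] allowedTags)
{
    var result = new Dictionary<string, double>(StringComparer.OrdinalIgnoreCase);

    foreach (string entry in response.Split(','))
    {
        string[] pair = entry.Split(':');
        if (pair.Length != 2) continue; // not a "tag:value" pair

        string tag = pair[0].Trim();
        if (!allowedTags.Contains(tag, StringComparer.OrdinalIgnoreCase)) continue; // invented tag

        // Invariant culture: the model returns values with a decimal point.
        if (double.TryParse(pair[1].Trim(), NumberStyles.Float,
                CultureInfo.InvariantCulture, out double probability))
        {
            result[tag] = probability;
        }
    }

    return result;
}
```

An empty dictionary then signals that the reply could not be interpreted, which the caller can treat as "unclassified".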

Let's try another document:

```
Enter the path to the document to classify:
Documents\contract.pdf
invoice:0.1, receipt:0.05, contract:0.65, quotation:0.05, agreement:0.05, other:0.1
Highest probability: contract
```

Again, OpenAI has determined that the input document is a contract, which is correct.
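The probability for the contract (0.65) is lower than for the invoice (0.8). When the tag is used to route documents, one option is to accept it only above a threshold and to send everything else to a person. The following is a minimal sketch of that idea; the helper `ChooseWorkflow`, the threshold value, and the workflow name "manual-review" are hypothetical and not part of the sample.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var contractRankings = new Dictionary<string, double>
{
    ["invoice"] = 0.1, ["receipt"] = 0.05, ["contract"] = 0.65,
    ["quotation"] = 0.05, ["agreement"] = 0.05, ["other"] = 0.1
};

Console.WriteLine(ChooseWorkflow(contractRankings, 0.5));

// Returns the highest-ranked tag if it reaches the threshold,
// otherwise the hypothetical fallback workflow "manual-review".
static string ChooseWorkflow(IReadOnlyDictionary<string, double> rankings, double threshold)
{
    if (rankings.Count == 0) return "manual-review";

    var best = rankings.OrderByDescending(entry => entry.Value).First();

    return best.Value >= threshold ? best.Key : "manual-review";
}
```

A suitable threshold depends on the documents and the cost of a wrong routing decision, so it should be chosen by testing with real samples.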

Conclusion

Using OpenAI and TX Text Control, we have created a simple document classification tool that can be used to route documents to the right workflows. The key to this solution is the prompt that is used to instruct the model to generate the output we need.

Download the sample from GitHub and test it with your own documents.